Setting up semantic search is easy using LangChain, a relatively new framework for building applications powered by Large Language Models.

Semantic search returns results based on the meaning of the search query. This is in contrast to a simple keyword search, which only finds results that contain the exact search term entered. For example, a semantic search for “car” can retrieve not only results for “car” but also for “vehicle” and “automobile”.

The underlying process is to encode pieces of text as embeddings, vector representations of the text, which can then be stored in a vector database. When a search is performed, the search term is also encoded into its embedding, and the database is searched for the closest vector representations.
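To make "closest vector representations" concrete, here is a minimal sketch in plain Python. The three-dimensional vectors are hand-made for illustration only (real embeddings have far more dimensions and come from a model), and cosine similarity is assumed as the closeness measure; it is a common choice, not necessarily the one a given vector database is configured to use.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy "embeddings", invented for this example
toy_embeddings = {
    "car": [0.9, 0.1, 0.0],
    "automobile": [0.85, 0.15, 0.05],
    "vehicle": [0.8, 0.3, 0.1],
    "banana": [0.0, 0.2, 0.95],
}


def nearest(query_vector, store, k=2):
    """Return the k keys whose vectors are most similar to query_vector."""
    ranked = sorted(
        store,
        key=lambda word: cosine_similarity(query_vector, store[word]),
        reverse=True,
    )
    return ranked[:k]


# Search for "car" among the other stored words
query = toy_embeddings["car"]
others = {w: v for w, v in toy_embeddings.items() if w != "car"}
results = nearest(query, others)
print(results)
```

With these toy vectors, the two related words rank ahead of the unrelated one, which is the behaviour the "car" example above describes.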

In this post, we will spin up a vector database (Elasticsearch), index a topic from Wikipedia to store there and then perform some semantic searches to get answers on the topic. OpenAI will be used to generate the text embeddings; however, there are many other models supported by LangChain that we could use. I’ve purposefully tried to keep things as simple as possible for this particular example.

To start, set up a Docker container running Elasticsearch:

    docker run -p 9200:9200 -e "discovery.type=single-node" -e "xpack.security.enabled=false" -e "xpack.security.http.ssl.enabled=false" docker.elastic.co/elasticsearch/elasticsearch:8.9.1

Some text should start getting output to the console. Make sure to keep the container up and running.

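Open up another terminal window and install LangChain, along with the packages that the scripts below rely on (the OpenAI client, the tiktoken tokenizer, the Elasticsearch client and BeautifulSoup for the web page loader):

    pip install langchain openai tiktoken elasticsearch beautifulsoup4

We will write our first Python script that: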

- Downloads some content from any webpage — in this case, Wikipedia

- Splits the content into n-token sized chunks (documents)

- Indexes and stores the data into the vector database that we just started using Docker

    import os

    from langchain.document_loaders import WebBaseLoader
    from langchain.embeddings.openai import OpenAIEmbeddings
    from langchain.text_splitter import RecursiveCharacterTextSplitter
    from langchain.vectorstores.elasticsearch import ElasticsearchStore

    # Download the page content
    loader = WebBaseLoader("https://en.wikipedia.org/wiki/Twin_Peaks")
    data = loader.load()

    # Split the content into chunks of at most 100 tokens, with no overlap
    text_splitter = RecursiveCharacterTextSplitter.from_tiktoken_encoder(
        chunk_size=100, chunk_overlap=0
    )
    documents = text_splitter.split_documents(data)

    # Read the API key from the environment instead of hard-coding it
    embedding = OpenAIEmbeddings(openai_api_key=os.environ["OPENAI_API_KEY"])

    # Embed the chunks and index them in the Elasticsearch container
    db = ElasticsearchStore.from_documents(
        documents=documents,
        es_url="http://localhost:9200",
        index_name="twin_peaks",
        embedding=embedding,
        strategy=ElasticsearchStore.ExactRetrievalStrategy()
    )

- Two variables worth playing around with here are chunk_size (the maximum number of tokens in each chunk) and chunk_overlap (the number of tokens that consecutive chunks share)
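To get a feel for how these two settings interact, here is a simplified sketch of fixed-size chunking over a list of tokens. It is not how RecursiveCharacterTextSplitter works internally (that splitter also tries to break on natural separators such as paragraphs and sentences); the function name chunk_tokens and the integer "tokens" are made up for the illustration.

```python
def chunk_tokens(tokens, chunk_size, chunk_overlap=0):
    """Split tokens into windows of chunk_size that share chunk_overlap items."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far each window advances
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # the last window already reached the end
    return chunks


# Ten stand-in tokens, represented as integers for readability
tokens = list(range(10))

no_overlap = chunk_tokens(tokens, chunk_size=4)
with_overlap = chunk_tokens(tokens, chunk_size=4, chunk_overlap=2)

print(no_overlap)
print(with_overlap)
```

A larger overlap produces more chunks and repeats some text across them, which can help when an answer straddles a chunk boundary, at the cost of a bigger index.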

Our second Python script will:

- Connect with the vector database

- Take some search queries, generate embeddings from them and then look for the most similar embeddings in the vector database to give us the most relevant answers

- Output the results

from langchain.vectorstores.elasticsearch import ElasticsearchStore

from langchain.chains import RetrievalQA

from langchain.chat_models import ChatOpenAI

from langchain.vectorstores.elasticsearch import ElasticsearchStore

from langchain.embeddings.openai import OpenAIEmbeddings

embedding = OpenAIEmbeddings(openai_api_key=[OpenAI API Key])

db = ElasticsearchStore(

es_url="http://localhost:9200",

index_name="twin_peaks",

embedding=embedding,

strategy=ElasticsearchStore.ExactRetrievalStrategy()

)

qa = RetrievalQA.from_chain_type(

llm=ChatOpenAI(temperature=0),

chain_type="stuff",

retriever=db.as_retriever()

)

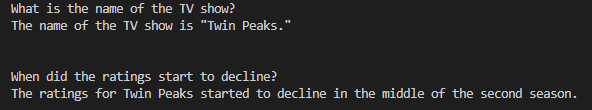

question = "What is the name of the TV show?"

answer = qa.run(question)

print("{0}\n{1}\n\n".format(question, answer))

question = "When did the ratings start to decline?"

answer = qa.run(question)

print("{0}\n{1}\n\n".format(question, answer))

- Make sure to supply your own OpenAI API key, ideally through an environment variable such as OPENAI_API_KEY instead of hard-coding it

- We can try adjusting the temperature parameter, which controls how much randomness is in the output. A value of 0 keeps the answers as repeatable as possible

- The chain_type parameter defines the type of document-combining chain to use. “Stuff”, or stuffing, is the simplest method: all retrieved documents are placed into a single prompt. It may be worth playing around with some of the other options as well. More information can be found here: https://docs.langchain.com/docs/components/chains/index_related_chains
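As a rough picture of what stuffing means, the sketch below joins the retrieved chunks into one block of context and places it in front of the question. The function name build_stuff_prompt and the prompt wording are invented for this illustration; LangChain uses its own prompt template, so treat this as the idea rather than the library's implementation.

```python
def build_stuff_prompt(question, documents):
    """Concatenate all retrieved chunks into a single prompt for the LLM."""
    context = "\n\n".join(documents)
    return (
        "Use the following context to answer the question.\n\n"
        f"{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Stand-ins for the chunks a retriever would return
retrieved = [
    "First retrieved chunk of text.",
    "Second retrieved chunk of text.",
]

prompt = build_stuff_prompt("What is the name of the TV show?", retrieved)
print(prompt)
```

Because everything goes into one prompt, stuffing only works while the retrieved chunks fit within the model's context window, which is one reason to keep chunk_size modest.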

It really is as simple as that. Using LangChain, we can also index content from files or a database. A number of other vector databases such as Pinecone, Prisma, Supabase and FAISS are also supported. The framework offers a set of abstractions that make working with large language models very easy, so I think it’s definitely worth checking out, especially if you are just starting to play around with LLMs. What’s covered here is only a very small piece of what LangChain can offer. Its core purpose is “chaining” together different components to support more advanced use cases when working with LLMs, whether that be conversational chatbots or autonomous agents that can follow a set of sequential instructions and work towards an outcome.

This basic example can be expanded into a back-end that indexes more data and exposes an API for performing the semantic searches which we can then integrate into an application.
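One possible shape for such an API, using only Python's standard library, is sketched below. Everything here is an assumption made for illustration: the /search route, the q query parameter, port 8000, and answer_question, which is a hypothetical stand-in for calling qa.run(question) from the second script.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import parse_qs, urlparse


def answer_question(question):
    """Hypothetical stand-in: a real back-end would call qa.run(question)."""
    return "stub answer for: " + question


def build_response(path):
    """Map a request path such as /search?q=... to (status code, JSON body)."""
    parsed = urlparse(path)
    params = parse_qs(parsed.query)
    if parsed.path != "/search" or "q" not in params:
        return 400, {"error": "expected /search?q=<question>"}
    question = params["q"][0]
    return 200, {"question": question, "answer": answer_question(question)}


class SearchHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Delegate routing to build_response, then serialise the result
        status, body = build_response(self.path)
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port=8000):
    """Start the server; this call blocks until the process is interrupted."""
    HTTPServer(("localhost", port), SearchHandler).serve_forever()
```

Calling serve() and then requesting http://localhost:8000/search?q=... from an application would return the question and answer as JSON. Keeping the routing in build_response, separate from the handler class, makes it easy to test without starting a server.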